Have you ever come across questions like:

- How to make physics and animation work together in Unity3D?

- How to combine physic movement with animation?

- How to animate physics?

- How to physicate animation?

Typical questions folks post on forums, only to get a flood of useless answers in response

And so on… Gamedev forums are flooded with such questions, which means people keep running into this problem and have to deal with it somehow. Perhaps you've been in a similar situation yourself. And I guess you found no solution! Perhaps…

Soo, what's the problem? In short: the problem is Unity itself. In 2019 it still has no appropriate way to combine keyframe-animated movement with physics-driven movement. And yes, I'm aware of kinematic rigidbodies, the 'Animate Physics' option in the Animator's Update Mode list and so on, but in my experience this stuff either doesn't work at all or works so badly you wish it didn't. So, I guess, we need a working tool that lets us combine animation and physics. I won't say I've invented one, but here's my approach.

First, we want to be able to have a predefined sequence in which values and other stuff change the way we set them. For this purpose we can use Unity's animation system; the alternatives are far more painful. So, we can use the default animation system, but only to modify those values that are not touched by the physics engine. Second, we can perform various tricks on the Rigidbody component with the animation system: we can change the rigidbody's mass, its drag coefficient, even set or remove constraints. Sounds neat. However, when it comes to changing its position, animation can't help us directly, because position is exactly what the physics engine controls. What we can do is apply all of that to another object and somehow link our object to it. For example, we can drive one object with animations and make another object follow it while being moved by physics forces. Strange, huh? Nope. Ever seen a cat being fooled by a laser pointer? I think we can borrow this idea.

Not only funny, but very useful as a concept too

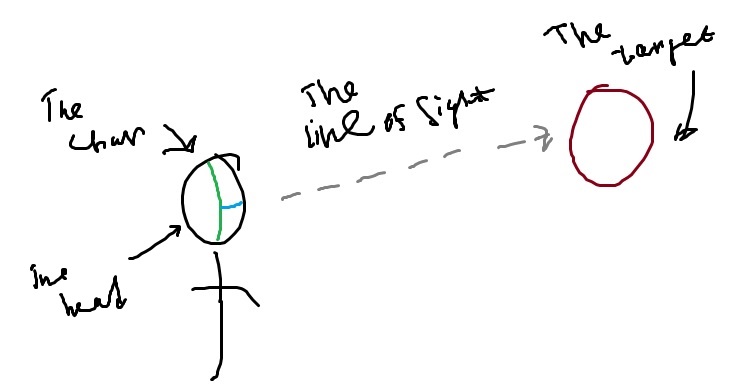

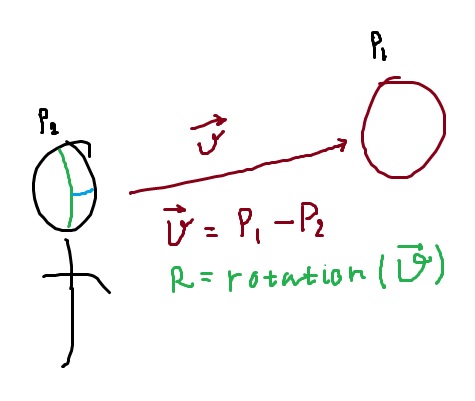

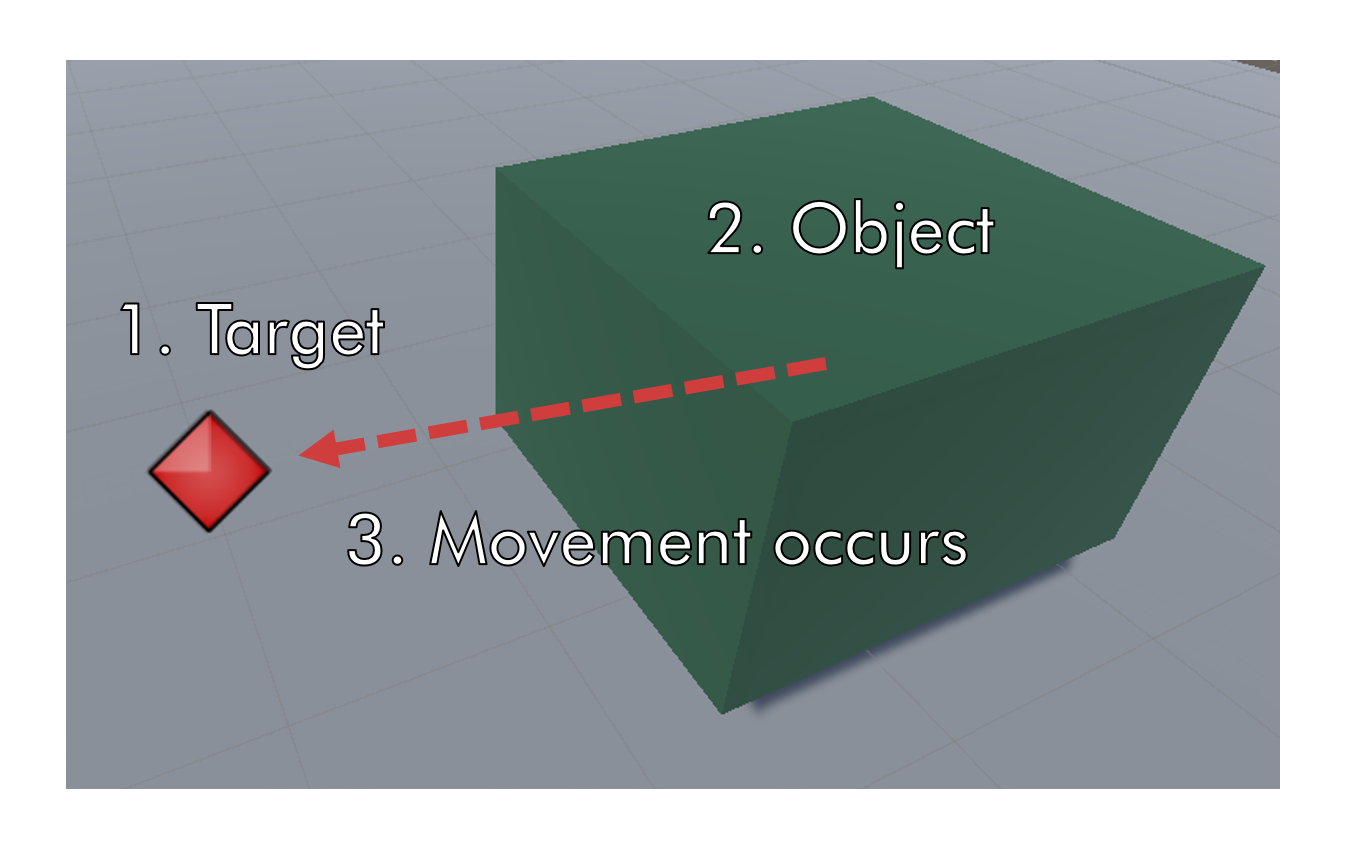

So, basically, we can use one object as a target, and another object (the one we need to move) will have to move toward this target. This is the easiest case: we only need to know the exact offset between the object and the target, and then we can apply enough force to move the object by that amount. Nothing extraordinary here.
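Written down as a rough sketch (the symbols are my own shorthand; $v_{\max}$ is an assumed cap on how much velocity we add per physics step, which the snippet further down calls `MaxVelocity`):

$$
\mathbf{d} = \mathbf{p}_{\text{target}} - \mathbf{p}_{\text{object}}, \qquad
\Delta\mathbf{v} = \frac{\mathbf{d}}{\lVert\mathbf{d}\rVert}\,\min\!\left(\lVert\mathbf{d}\rVert,\ v_{\max}\right)
$$

So the farther away the target is, the harder the object gets pushed toward it, up to the cap.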

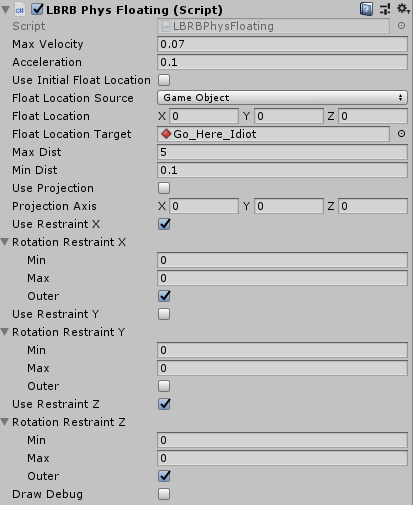

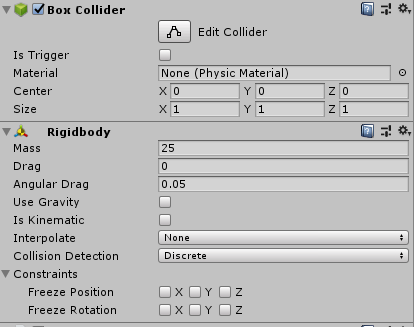

All of the target's transformations are performed by an animation sequence. There's nothing more to it. Actually, the target is just an empty GameObject with an Animator component attached to it (with the corresponding animation sequences). The object's stuffing looks a bit different. First, the object has a collider and a rigidbody, which allow it to interact in a physically-driven manner. However, that's not enough. We have to make some custom component (or use an existing one) to be able to move the object. So I made one and called it LBRBPhysFloating.

Component layout for the ‘object’

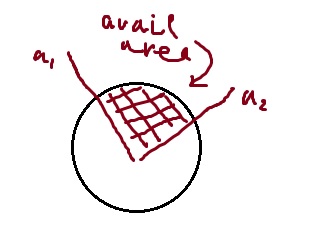

This component may look sophisticated, but all it does is apply the needed amount of force to the object. The word 'floating' here just stands for the floating kind of movement, nothing more. Anyway, the key concept of this component is the following: we find the target's location, subtract our rigidbody's location from it, and then apply a force proportional to that difference. The corresponding snippet is below, the full listing is here.

    protected void PerformMovement()
    {
        // Offset from the rigidbody to the point it should float toward
        Vector3 delta = GetFloatLocation() - _rigidbody.position;

        // The distance check is optional; the AddForce call is what matters here
        if (delta.magnitude >= 0.1f)
            _rigidbody.AddForce(delta.normalized * Mathf.Clamp(delta.magnitude, 0, MaxVelocity), ForceMode.VelocityChange);

        // If the object is moving away from the target, cancel that velocity;
        // this odd-looking condition is only here to let the object 'float'
        if (Vector3.Dot(_rigidbody.velocity.normalized, delta.normalized) < 0)
            _rigidbody.AddForce(-_rigidbody.velocity.normalized * Mathf.Clamp(_rigidbody.velocity.magnitude, 0, MaxVelocity), ForceMode.VelocityChange);
    }

Basically, you can put any code at the top, but the AddForce calls should stay where they are

Of course, everything should be called from FixedUpdate. Also, you may want to read up on ForceMode and ForceMode.VelocityChange, which does all the physics-based magic, and maybe you would like to learn about the differences between ForceMode.VelocityChange and ForceMode.Acceleration. This is explained here.
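To show how the snippet above might sit inside a component, here is a minimal sketch. It is not the actual LBRBPhysFloating listing: the class name `FloatToTargetSketch`, the `Target` field, the default `MaxVelocity` value and the body of `GetFloatLocation()` are all my assumptions for illustration, and it assumes the Unity 2019-era Rigidbody API.

```csharp
using UnityEngine;

// Hypothetical wrapper around the PerformMovement snippet.
// Everything except the Unity API calls is an assumption made for this sketch.
[RequireComponent(typeof(Rigidbody))]
public class FloatToTargetSketch : MonoBehaviour
{
    // The animated empty GameObject to chase (assumed field name).
    public Transform Target;

    // Upper bound on the velocity change requested per physics step (assumed default).
    public float MaxVelocity = 5f;

    private Rigidbody _rigidbody;

    private void Awake()
    {
        _rigidbody = GetComponent<Rigidbody>();
    }

    // Physics work goes into FixedUpdate so it runs in step with the simulation.
    private void FixedUpdate()
    {
        if (Target != null)
            PerformMovement();
    }

    // Assumed stand-in: the point to float toward is simply the target's world position.
    protected Vector3 GetFloatLocation()
    {
        return Target.position;
    }

    protected void PerformMovement()
    {
        Vector3 delta = GetFloatLocation() - _rigidbody.position;

        // Push toward the target, capped by MaxVelocity.
        if (delta.magnitude >= 0.1f)
            _rigidbody.AddForce(delta.normalized * Mathf.Clamp(delta.magnitude, 0, MaxVelocity), ForceMode.VelocityChange);

        // Moving away from the target: request an opposite velocity change to cancel it.
        if (Vector3.Dot(_rigidbody.velocity.normalized, delta.normalized) < 0)
            _rigidbody.AddForce(-_rigidbody.velocity.normalized * Mathf.Clamp(_rigidbody.velocity.magnitude, 0, MaxVelocity), ForceMode.VelocityChange);
    }
}
```

To try it, you would attach it to the object that has the collider and rigidbody, then drag the animated target into the `Target` slot. As for the mode: with ForceMode.VelocityChange the vector is added to the velocity directly and mass is ignored. With ForceMode.Acceleration the same vector would be treated as an acceleration, so the velocity would only change by that vector multiplied by the fixed timestep, which gives a much softer pull.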

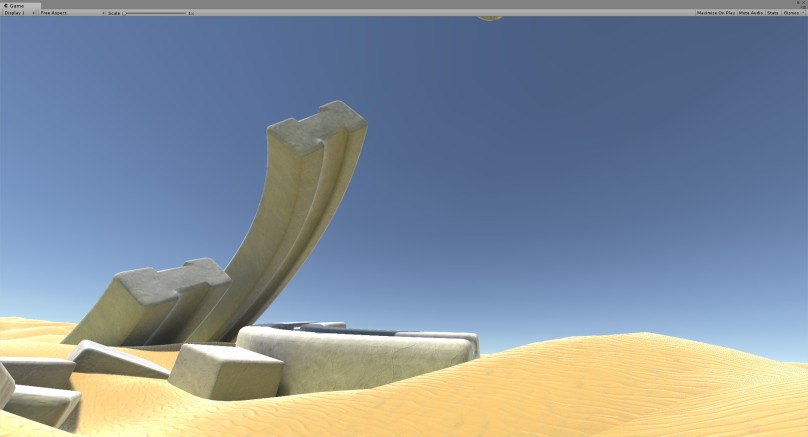

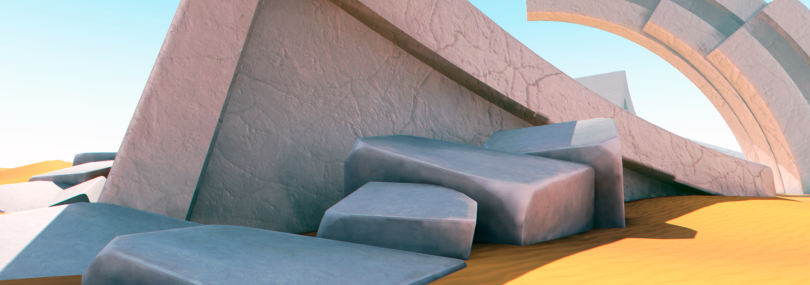

So, what have we got? Let's see. Here I've made a simple scene with all the necessary content: the green GameObject is our 'object' (the one to move), the red ellipse is our 'target'. As I move the target around (in the editor's Play mode, thanks, Unity), the object follows it. The most important thing here is that the object still handles physical interactions with other objects (see the second GIF).

So, here it is…

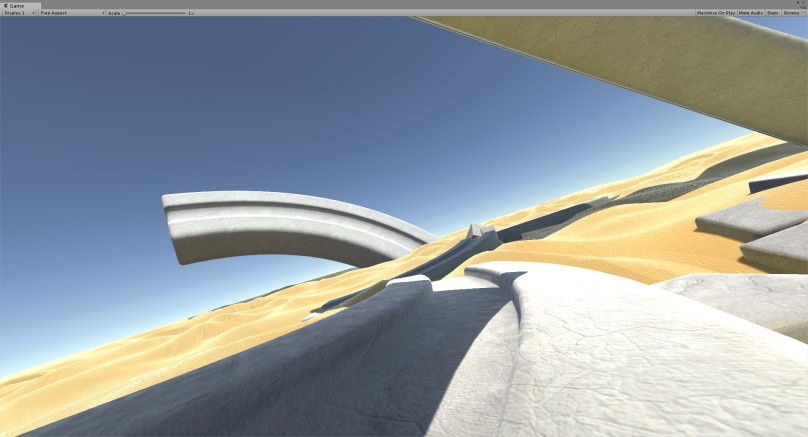

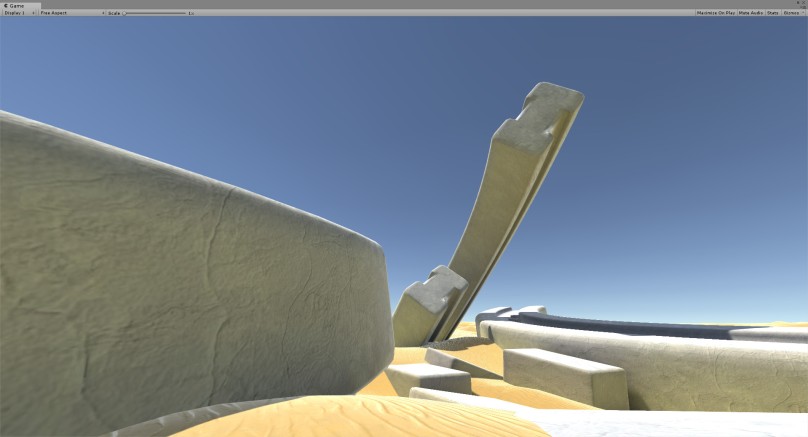

The next important thing is to animate the target, so you won't have to drag it around yourself every time. In this example I wanted to use a simple, plain, built-in Animator component. Why not? Nothing more, I just made a simple trajectory and… And it worked! Yeah, it worked nicely. I didn't even expect such a satisfying result. You can watch the video below and admire it.